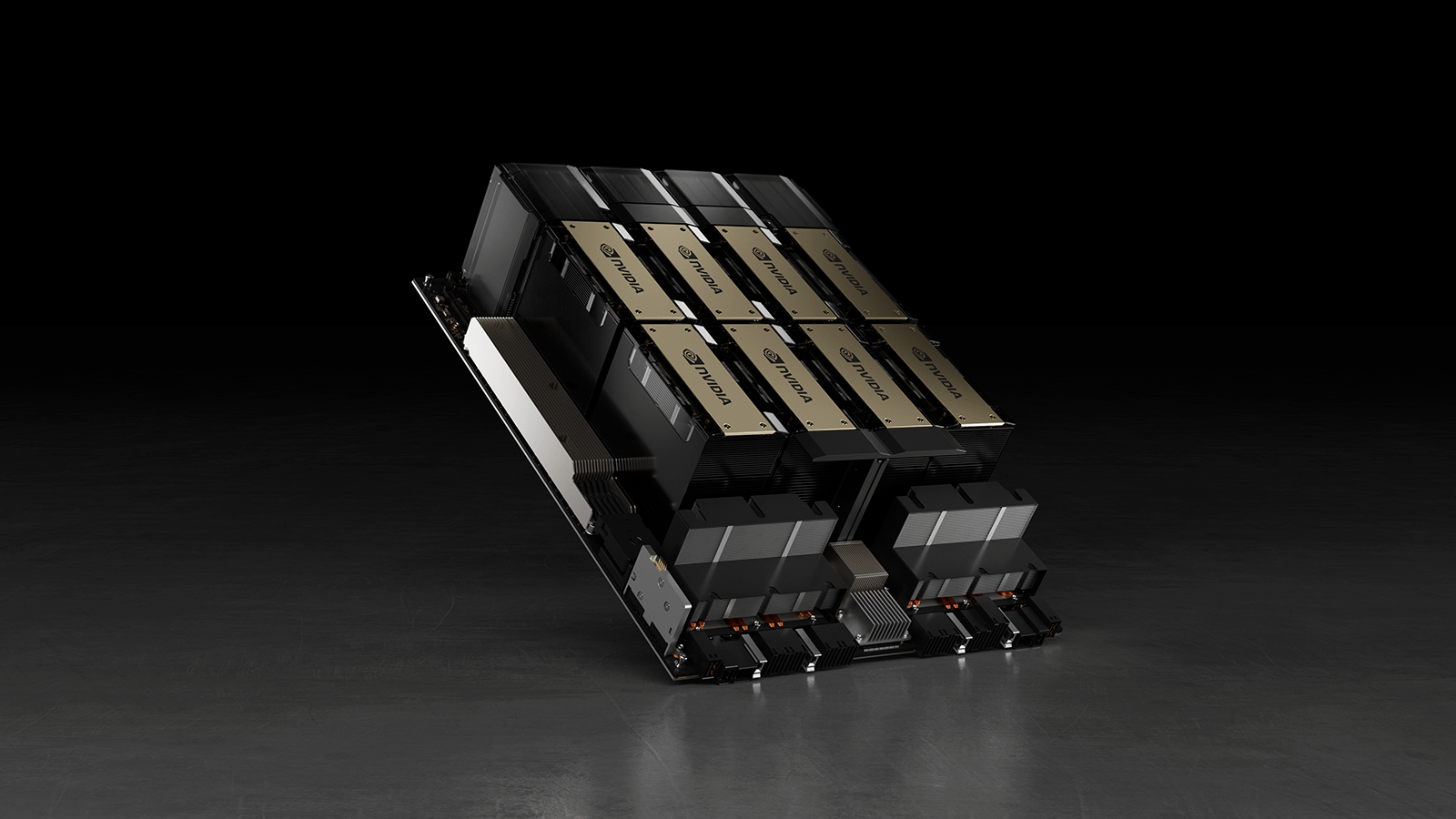

In a cluster of 16,384 H100 GPUs, something fails every few hours. According to Meta, the H100 GPUs themselves are to blame in most cases.
